As the number of parameters in Large Language Models (LLMs) reaches the trillions, training them becomes extremely challenging. Traditional data centre network designs, such as the "Rail-optimised" architecture based on Clos networks, provide any-to-any connectivity but are inefficient and costly for large-scale LLM training.

MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL), in collaboration with Meta Platforms, has proposed a radical idea: the Rail-only network architecture, which removes the Spine switch layer altogether. Compared with a traditional GPU data centre network, the design maintains the same training performance while cutting network cost by 38% to 77% and network power consumption by 37% to 75%.

Background

Clos networks are an efficient data centre architecture that provides full connectivity between nodes (such as GPUs and DPUs), so that any node can communicate with every other node. However, Clos networks are not the only way to achieve all-to-all connectivity. The Dragonfly topology is another common option in today's supercomputing centres and has also been used in data centre fabrics such as Google's Aquila.

The Dragonfly topology has its own advantages, but it also has some significant drawbacks. In particular, adding new machines to a Dragonfly network typically requires reconfiguring and rewiring the entire network, which adds both operational complexity and cost. A Clos network, by contrast, makes it much easier to add and connect new nodes.

However, the Clos network is not perfect either. Compared with Dragonfly, it may be slightly less effective at providing consistent latency across the network. Overall, both approaches have their pros and cons.

Training models with trillions of parameters in a reasonable amount of time generally requires large AI training systems with roughly 24,000 to 32,000 GPUs.

The Meta Platforms system used to train the Llama 3.1 405B model currently has 24,576 GPUs, and the next-generation model is expected to span 32,768 GPUs in a single cluster.

The Clos network is built from Ethernet Leaf and Spine switches, all of which support Remote Direct Memory Access (RDMA), so GPUs can use this all-to-all topology to exchange data with every other GPU in the network simultaneously.

Building a high-bandwidth Clos network to connect more than 32,000 GPUs would cost $153 million, and the entire network itself would consume 4.7 megawatts of power.

The paper states that a Clos fabric connecting 30,000 GPUs with 400 Gb/s links would cost $200 million, far more than any hyperscaler or cloud provider would spend to connect 4,096 server nodes.

The figure below shows the interaction of network cost and network capacity as the AI cluster scales:

When the number of GPUs doubles to 65,536, the network will cost $300 million at 400 Gb/s port speeds and consume about 6 megawatts of power.
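Dividing the quoted totals by the GPU count puts the two cluster sizes on a per-GPU footing. The snippet below is plain arithmetic on the figures above; it assumes "more than 32,000 GPUs" means 32,768 (4,096 eight-GPU servers), and the names `CLUSTERS` and `per_gpu` are illustrative only.

```python
# Per-GPU network cost and power, using only the totals quoted in the text.
# Assumption: "more than 32,000 GPUs" is taken to be 32,768 GPUs.
CLUSTERS = {
    # GPU count: (network cost in USD, network power in watts)
    32_768: (153e6, 4.7e6),
    65_536: (300e6, 6.0e6),
}

def per_gpu(gpus: int, cost_usd: float, power_w: float) -> tuple[float, float]:
    """Return (USD per GPU, watts per GPU) spent on the network alone."""
    return cost_usd / gpus, power_w / gpus

for gpus, (cost, power) in CLUSTERS.items():
    usd, watts = per_gpu(gpus, cost, power)
    print(f"{gpus:>6} GPUs: ${usd:,.0f} and {watts:.0f} W of network per GPU")
```

With these inputs the network works out to about $4,669 and 143 W per GPU at 32,768 GPUs, and about $4,578 and 92 W per GPU at 65,536 GPUs.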


Intra-platform connectivity: high bandwidth domains (HB domains)

The proliferation of resource-intensive machine learning workloads has made GPU-centric platforms, optimised for multi-GPU workloads, mainstream.

These platforms integrate high-bandwidth local interconnects between the GPUs within each platform to meet the growing demand for communication.

Although platforms from different manufacturers (such as Nvidia and AMD) differ in computational performance (FLOPS), GPU and CPU architectures, and interconnect technologies, they all share one core characteristic: internal GPU-to-GPU bandwidth measured in terabits per second (Tbps).

For example, Nvidia's DGX H100 server integrates eight H100 GPUs connected via NVSwitches, providing 7.2 Tbps of non-blocking bandwidth per GPU.

The recently released GB200 NVL72 rack-scale system goes even further, connecting 36 GB200 superchips (72 Blackwell GPUs) in a single rack via fifth-generation NVLink at 14.4 Tbps per GPU.

AMD's MI300X platform, on the other hand, uses Infinity Fabric to connect eight MI300X accelerators in a full-mesh topology, and each GPU likewise gets 7.2 Tbps of bandwidth. In addition, systems such as Nvidia's DGX GH200 supercomputer scale the platform to 256 GPUs with a multi-layer NVSwitch topology while maintaining 7.2 Tbps of full-bisection GPU-to-GPU bandwidth.

These platforms with Tbps internal bandwidth are collectively referred to as "High Bandwidth (HB) domains" and the corresponding interconnect technology is called HB Interconnect (HBI).
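The gap between the two domains becomes clear once both are expressed in the same unit. The sketch below assumes the commonly published NVLink figures of 900 GB/s per H100 and 1.8 TB/s per Blackwell GPU, and one 400 Gb/s NIC per GPU for traffic leaving the HB domain; the dictionary and helper names are hypothetical.

```python
# Assumption: one 400 Gb/s NIC per GPU for traffic that leaves the HB domain.
NIC_GBPS = 400

# Assumption: commonly published per-GPU NVLink bandwidth, in gigabytes/s.
HB_GBYTES_PER_S = {
    "DGX H100 (NVLink 4)": 900,
    "GB200 NVL72 (NVLink 5)": 1800,
}

def gbytes_to_tbps(gbytes_per_s: float) -> float:
    """Convert GB/s to Tb/s (8 bits per byte, 1,000 Gb per Tb)."""
    return gbytes_per_s * 8 / 1000

for name, gb in HB_GBYTES_PER_S.items():
    tbps = gbytes_to_tbps(gb)
    ratio = tbps * 1000 / NIC_GBPS  # HB bandwidth as a multiple of NIC bandwidth
    print(f"{name}: {tbps:.1f} Tbps per GPU, {ratio:.0f}x a {NIC_GBPS} Gb/s NIC")
```

Under these assumptions a GPU's HB bandwidth is 18 to 36 times its NIC bandwidth, an asymmetry the Rail-only design relies on later.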

Cross-platform connectivity: NIC domains

However, despite these large gains in internal bandwidth, GPU-centric platforms remain limited in their ability to scale beyond a single platform. Operators therefore connect the NICs of different platforms using traditional networking technologies such as Ethernet or InfiniBand, forming the so-called "NIC domain". Within NIC domains, Rail-optimised networks are widely used as an advanced interconnect architecture, especially in high-performance computing (HPC).

Although the Rail-optimised network is better suited to DNN training than traditional CPU-centric data centre networks, it was designed primarily for HPC workloads and does not fully exploit the distinctive traffic patterns of LLM training.

Traditional data centre designs, such as the Clos network, focus on handling unpredictable and bursty CPU-intensive workloads, supporting multiple application scenarios by providing arbitrary connections between servers.

The Rail-optimised network used in GPU training clusters evolved from this data centre Clos network and is tuned to the characteristics of GPU workloads. In a Rail-optimised network, GPUs with the same local rank in different HB domains are attached to the same rail, served by its Rail switches, to minimise latency.
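The rank-to-rail rule can be written down directly. The sketch below assumes HB domains of equal size and identifies each GPU by a hypothetical `(domain, local_rank)` pair; `rail_assignment` is an illustrative helper, not part of any cluster tool.

```python
from collections import defaultdict

def rail_assignment(num_domains: int, gpus_per_domain: int) -> dict[int, list[tuple[int, int]]]:
    """Group GPUs, identified as (domain, local_rank), by the rail they attach to.

    Assumption: the NIC of local rank r in every HB domain connects to rail r,
    so each rail sees exactly one GPU from every domain.
    """
    rails: dict[int, list[tuple[int, int]]] = defaultdict(list)
    for domain in range(num_domains):
        for local_rank in range(gpus_per_domain):
            rails[local_rank].append((domain, local_rank))
    return dict(rails)

rails = rail_assignment(num_domains=4, gpus_per_domain=8)
print(len(rails))  # 8 rails, one per local rank
print(rails[3])    # [(0, 3), (1, 3), (2, 3), (3, 3)]
```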

This layout takes advantage of the highly localised nature of DNN training traffic.

However, while the Rail-optimised network is good at reducing local communication latency, it still relies on a Spine switch layer to interconnect the individual Rail switches, forming a full-bisection Clos topology.

This design ensures that any two GPUs in different HB domains can communicate at hundreds of Gbps. But it is worth asking whether the Spine switches are really necessary.

Analysis of LLM traffic patterns

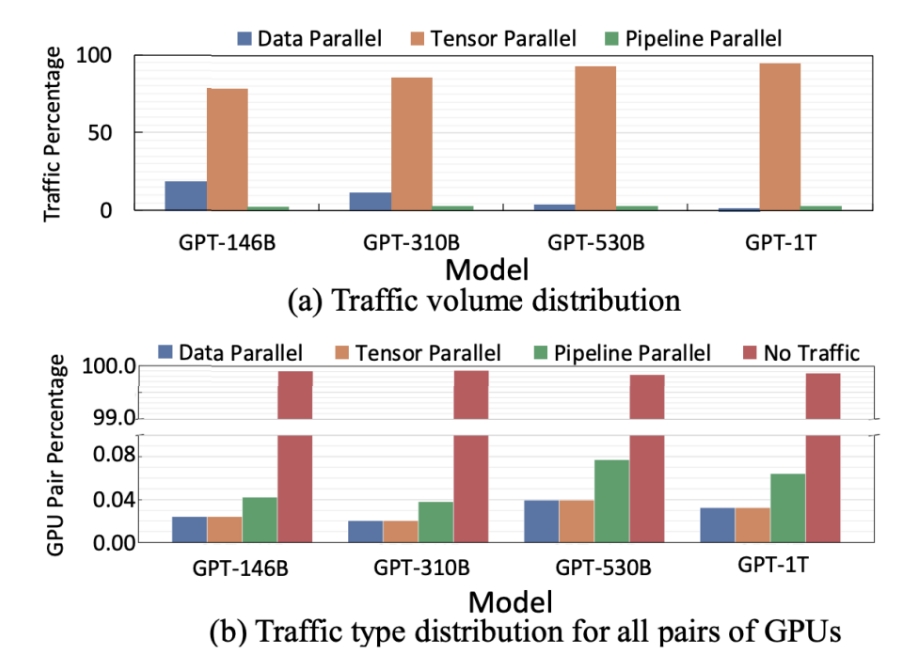

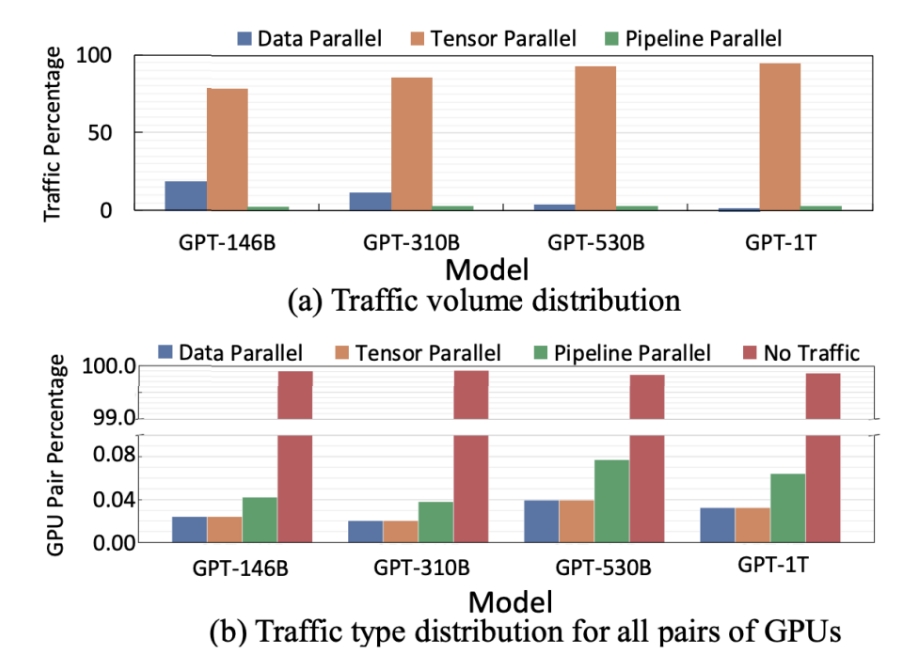

Taking the GPT models from the Megatron-LM paper as a reference, the authors studied the parallelisation strategies and traffic characteristics of models of different sizes on a cluster of up to 3,072 GPUs (384 DGX A100 servers). The following is a more in-depth analysis of the traffic pattern of the Megatron GPT-1T model:

The analysis found that Tensor Parallelism (TP) traffic dominates and stays mainly within the HB domain, while Data Parallelism (DP) and Pipeline Parallelism (PP) traffic is less frequent and travels mainly within individual rails of the NIC domain.

In other words, traffic is dense within the HB domain, while in the NIC domain it is light and largely confined to individual rails.
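Why DP and PP traffic stays on a rail follows from how ranks are laid out. The sketch below assumes a hypothetical Megatron-style layout in which TP is the innermost dimension and the TP degree equals the HB domain size of 8 GPUs; the degrees and helper names are illustrative, not the configuration used in the paper.

```python
# Assumptions: 8 GPUs per HB domain, consecutive ranks fill a domain,
# TP is the innermost parallel dimension and TP == domain size.
GPUS_PER_DOMAIN = 8
TP, PP, DP = 8, 4, 2  # illustrative parallelism degrees (64 GPUs)

def rank(dp: int, pp: int, tp: int) -> int:
    """Global rank with TP innermost; the DP/PP order does not change the result."""
    return dp * PP * TP + pp * TP + tp

def location(r: int) -> tuple[int, int]:
    """Map a global rank to (HB domain, local rank)."""
    return divmod(r, GPUS_PER_DOMAIN)

# Ranks that talk to each other along each parallelism dimension.
tp_groups = [[rank(d, p, t) for t in range(TP)] for d in range(DP) for p in range(PP)]
pp_groups = [[rank(d, p, t) for p in range(PP)] for d in range(DP) for t in range(TP)]
dp_groups = [[rank(d, p, t) for d in range(DP)] for p in range(PP) for t in range(TP)]

def domains_of(group: list[int]) -> set[int]:
    return {location(r)[0] for r in group}

def rails_of(group: list[int]) -> set[int]:
    return {location(r)[1] for r in group}

# Every TP group sits inside a single HB domain ...
print(all(len(domains_of(g)) == 1 for g in tp_groups))            # True
# ... while PP and DP groups span several domains but stay on one rail.
print(all(len(domains_of(g)) > 1 for g in pp_groups + dp_groups))  # True
print(all(len(rails_of(g)) == 1 for g in pp_groups + dp_groups))   # True
```

If TP were smaller than the HB domain, some DP or PP peers would land on different rails and would need the forwarding described in the Rail-only design.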

In addition, the Mixture-of-Experts (MoE) layers used in some LLMs generate all-to-all traffic, but this too can be optimised with sensible GPU mapping and hierarchical collective communication algorithms.
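One simple form of hierarchical all-to-all shows how MoE traffic can be kept on rails. The sketch below assumes equal-size HB domains and only tracks which links each chunk crosses, not timing; the function name and the 4-domain, 8-GPU-per-domain cluster are hypothetical.

```python
from collections import Counter
from itertools import product

def hierarchical_all_to_all(num_domains: int, gpus_per_domain: int) -> Counter:
    """Count link usage for a two-phase all-to-all (a simplified sketch).

    Each GPU is (domain, local_rank) and sends one chunk to every other GPU.
    Phase 1 (HB domain): (d, r) hands the chunk for (d2, r2) to its
    domain-local peer (d, r2), unless r == r2.
    Phase 2 (NIC domain): (d, r2) sends the chunk over rail r2 to (d2, r2),
    unless d == d2.
    """
    links: Counter = Counter()
    gpus = list(product(range(num_domains), range(gpus_per_domain)))
    for (d, r), (d2, r2) in product(gpus, gpus):
        if (d, r) == (d2, r2):
            continue  # no chunk to self
        if r != r2:
            links["hb"] += 1  # intra-domain hop to the peer on the destination's rail
        if d != d2:
            links[f"rail{r2}"] += 1  # cross-domain hop, same local rank at both ends
    return links

links = hierarchical_all_to_all(num_domains=4, gpus_per_domain=8)
print(links["hb"])     # 896
print(links["rail0"])  # 96
print(len(links) - 1)  # 8 rails carry the cross-domain traffic
```

Every cross-domain transfer in this scheme connects two GPUs with the same local rank, which is exactly the connectivity a rail provides.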

Whether it is TP, DP or PP traffic, very little of it ever needs to pass through the Spine switches. So why not chop the head off the network and get rid of the Spine aggregation switches altogether?

Rail-only network design

Compared with traditional Rail-optimised GPU clusters, the Rail-only network keeps the HB domains and Rail switches but removes the Spine switches.

This change leaves the bandwidth between GPU pairs on the same rail unchanged, while simplifying the network fabric and reducing its cost.

Specifically, by removing the Spine switches and reconfiguring the links between the Rail switches and the GPUs, the design turns each rail into a dedicated, independent Clos network. Because the Rail switches then have spare downlink ports that can connect directly to additional GPUs, the Rail-only design needs significantly fewer switches than a Rail-optimised network, lowering the overall network cost.
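To see why spare downlink ports translate into fewer switches, the sketch below counts switches under a deliberately simplified model: identical radix-k switches, full-bisection leaf-spine fabrics of at most two tiers, and GPU counts that divide evenly into rails. It is not the paper's cost model; the function names and the 64-port radix are hypothetical.

```python
import math

# Simplified model (assumption): identical radix-k switches, full-bisection
# fabrics of at most two tiers, GPU counts that divide evenly into rails.

def two_tier_switches(endpoints: int, radix: int) -> int:
    """Switches needed to give `endpoints` ports full bisection bandwidth."""
    if endpoints <= radix:
        return 1  # a single switch reaches every endpoint
    if endpoints > radix * radix // 2:
        raise ValueError("needs more than two tiers; outside this sketch")
    leaves = math.ceil(endpoints / (radix // 2))  # k/2 ports down, k/2 up
    spines = math.ceil(leaves / 2)               # absorbs leaves * k/2 uplinks
    return leaves + spines

def rail_optimised(num_gpus: int, radix: int) -> int:
    """One fabric over all GPUs: Rail switches as leaves plus a shared Spine."""
    return two_tier_switches(num_gpus, radix)

def rail_only(num_gpus: int, gpus_per_domain: int, radix: int) -> int:
    """One independent fabric per rail, each reaching one GPU per HB domain."""
    per_rail = num_gpus // gpus_per_domain
    return gpus_per_domain * two_tier_switches(per_rail, radix)

print(rail_optimised(512, radix=64))                # 24
print(rail_only(512, gpus_per_domain=8, radix=64))  # 8
```

In this 512-GPU example each rail reaches only 64 GPUs, so a single 64-port switch per rail suffices and the count drops from 24 to 8. Real deployments involve larger radices, more tiers and cabling costs, so the paper's reported savings rest on its own, more detailed model rather than this toy count.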

In a Rail-only network, direct connectivity between different HB domains is removed, but data can still travel across domains by being forwarded within an HB domain.

For example, when GPU 1 in Domain 1 sends a message to GPU 2 in Domain 2 in the figure above, the message is first routed through HB domain 1 to GPU 2 in Domain 1, which sits on the same rail as the destination, and is then transmitted over the rail network to its final destination. Although this forwarding incurs a bandwidth tax (extra traffic caused by the additional hop), the paper shows that the resulting performance degradation is almost negligible because of the bandwidth asymmetry between the HB domains and the NIC domains.
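The forwarding rule and its cost can be sketched as follows. GPUs are identified by a hypothetical `(domain, local_rank)` pair, the route always rides the destination's rail, and the timing is a crude store-and-forward estimate that assumes 7.2 Tbps of HB bandwidth and a 400 Gb/s NIC per GPU with no overlap between hops; it is not the paper's performance model.

```python
Gpu = tuple[int, int]  # (HB domain, local rank)

HB_GBPS = 7200.0  # assumption: per-GPU HB bandwidth of 7.2 Tbps
NIC_GBPS = 400.0  # assumption: per-GPU NIC bandwidth of 400 Gb/s

def route(src: Gpu, dst: Gpu) -> list[tuple[str, Gpu, Gpu]]:
    """Hops (link type, from, to) for a message in a Rail-only network."""
    (d1, r1), (d2, r2) = src, dst
    if d1 == d2:
        # Same HB domain: one intra-domain hop, or nothing for a self-send.
        return [] if r1 == r2 else [("hb", src, dst)]
    hops = []
    relay = (d1, r2)  # peer in the source domain on the destination's rail
    if relay != src:
        hops.append(("hb", src, relay))  # the forwarding hop (bandwidth tax)
    hops.append(("rail", relay, dst))
    return hops

def transfer_time_s(size_gbit: float, hops: list[tuple[str, Gpu, Gpu]]) -> float:
    """Store-and-forward estimate: each hop moves the whole message in turn."""
    return sum(size_gbit / (HB_GBPS if kind == "hb" else NIC_GBPS) for kind, _, _ in hops)

forwarded = route((0, 1), (2, 5))  # GPU 1 of domain 0 -> GPU 5 of domain 2
direct = route((0, 5), (2, 5))     # sender already on the destination's rail
overhead = transfer_time_s(1.0, forwarded) / transfer_time_s(1.0, direct) - 1
print(forwarded)
print(f"{overhead:.1%}")  # 5.6%
```

Under these assumptions the extra hop adds about 5.6% to a single cross-rail transfer, because the HB link is 18 times faster than the NIC; overlapping the two hops would shrink this further.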

The authors then compared the performance of Rail-only networks with that of traditional Rail-optimised networks.

The results show that for LLMs with trillions of parameters, the Rail-only network significantly reduces the network cost and power consumption while maintaining the same training performance. Specifically, the network cost is reduced by 38% to 77% and the power consumption is reduced by 37% to 75%.