Load balancing is a technique for spreading network requests or services across multiple servers or network devices to improve performance, reliability, and scalability.

With load balancing, a large volume of concurrent requests or data traffic is spread over multiple processing units, so that each unit receives a reasonable share of the workload and no single server becomes overloaded.

The principle of load balancing can be summarised as "spread the requests and centralise the processing".

Specifically, when a client makes a request, the load balancer distributes the request to one or more servers on the backend based on predefined algorithms and policies. These servers can be physical servers, virtual machines or containers.

A load balancer is usually deployed at the front end of the network architecture to act as an intermediary between clients and servers. It is responsible for listening to client requests and selecting appropriate servers to handle those requests based on a load balancing algorithm.

Once a server has processed the request and generated a response, the response is typically returned to the client through the load balancer. The load balancing algorithm is the heart of the load balancer: it determines how requests are distributed among the servers.

Common load balancing algorithms include Round Robin, Least Connections, Hash, and so on. Each of these algorithms has its own characteristics and is suitable for different application scenarios.
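As a rough illustration of the three algorithms named above, the Python sketch below models each one as a small class with a pick method. The class names, the backend addresses and the use of MD5 for hashing are illustrative assumptions, not the implementation of any particular load balancer.

```python
import hashlib
import itertools


class RoundRobin:
    """Hands out backends in a fixed cyclic order, ignoring their load."""

    def __init__(self, backends):
        self._cycle = itertools.cycle(backends)

    def pick(self, client_ip=None):
        return next(self._cycle)


class LeastConnections:
    """Picks the backend that currently has the fewest active connections."""

    def __init__(self, backends):
        self.active = {backend: 0 for backend in backends}

    def pick(self, client_ip=None):
        # On a tie, min() returns the first backend in insertion order.
        backend = min(self.active, key=self.active.get)
        self.active[backend] += 1
        return backend

    def release(self, backend):
        # Must be called when a connection to this backend closes.
        self.active[backend] -= 1


class SourceHash:
    """Always sends the same client IP to the same backend (hash modulo N)."""

    def __init__(self, backends):
        self.backends = list(backends)

    def pick(self, client_ip):
        digest = hashlib.md5(client_ip.encode()).digest()
        index = int.from_bytes(digest[:4], "big") % len(self.backends)
        return self.backends[index]


backends = ["10.0.0.1", "10.0.0.2", "10.0.0.3"]

rr = RoundRobin(backends)
print([rr.pick() for _ in range(4)])  # ['10.0.0.1', '10.0.0.2', '10.0.0.3', '10.0.0.1']

lc = LeastConnections(backends)
first = lc.pick()    # 10.0.0.1
second = lc.pick()   # 10.0.0.2
lc.release(first)    # the connection to 10.0.0.1 closes
print(lc.pick())     # 10.0.0.1: tied with 10.0.0.3 at zero, and listed first

sh = SourceHash(backends)
print(sh.pick("192.168.1.7") == sh.pick("192.168.1.7"))  # True
```

Round robin ignores server state, least connections requires the balancer to track when connections close (the release call), and hashing keeps a given client on the same server, which helps with session affinity but remaps many clients whenever the number of servers changes.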

Load balancing techniques offer a variety of benefits, including improving performance, enhancing reliability, and enabling scalability.

By spreading requests across multiple servers, load balancing can significantly improve overall system performance, avoid single points of failure, and improve system availability and stability. In addition, load balancing allows for seamless scaling; when the system needs to handle more requests, simply add more servers.

Load balancing is a technique that spreads network requests or services across multiple servers, improving system performance, reliability and scalability by spreading out requests and centralising processing.

The principle lies in the use of load balancers as an intermediary between clients and servers, distributing requests to appropriate servers for processing according to preset algorithms and policies. With the continuous development of cloud computing and big data technology, load balancing will continue to play an important role in network architecture, providing a strong guarantee for modern network services.

Load Balancing Types

Load balancers can be roughly divided into three categories: DNS-based load balancing, hardware load balancing, and software load balancing.

DNS Load Balancing

DNS is the most basic and simplest way to implement load balancing.

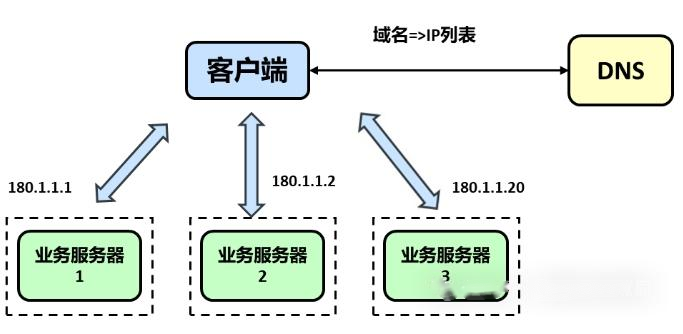

DNS resolves one domain name to multiple IP addresses, each corresponding to a different server instance. This spreads traffic across the servers: although no dedicated load balancer is involved, it provides a simple form of load balancing.
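A toy model of the idea, assuming an authoritative server that rotates the order of its A records on every query and clients that simply connect to the first address they receive. The domain and the 192.0.2.x addresses (a documentation range) are placeholders, and RoundRobinDNS is a hypothetical class, not a real DNS server.

```python
from collections import deque


class RoundRobinDNS:
    """Toy authoritative DNS server that rotates its A records per query."""

    def __init__(self, zone):
        # zone: domain name -> list of IPv4 addresses (hypothetical data)
        self.zone = {name: deque(ips) for name, ips in zone.items()}

    def resolve(self, name):
        records = self.zone[name]
        answer = list(records)  # the full record set, in the current order
        records.rotate(-1)      # the next query sees a different address first
        return answer


dns = RoundRobinDNS({"www.example.com": ["192.0.2.10", "192.0.2.11", "192.0.2.12"]})

# Assume each client connects to the first address in its answer.
for _ in range(4):
    print(dns.resolve("www.example.com")[0])
# Prints 192.0.2.10, 192.0.2.11, 192.0.2.12, 192.0.2.10 (one per line)
```

The rotation only happens at the authoritative server; caching resolvers between it and the users may hand the same answer to many clients, which is where several of the drawbacks below come from.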

The biggest advantages of DNS load balancing are that it is simple to implement and cheap, and you don't need to develop or maintain your own load balancing device. It does, however, have some drawbacks:

- Slow failover and inconvenient server upgrades. There are several layers of caching between DNS and users. Even if the record for a faulty server is changed or removed in DNS as soon as the failure occurs, the change has to pass through ISPs' DNS caches, which often do not honour the TTL. As a result, DNS changes take effect very slowly, and sometimes a little request traffic still arrives a day later.

- Uneven, coarse-grained traffic scheduling. How evenly DNS spreads traffic depends on the policy each regional ISP's LocalDNS uses when returning the IP list, and some ISPs do not rotate the order of the multiple IP addresses they return. In addition, all users behind the same LocalDNS receive the same cached answer, so the number of users a LocalDNS serves can itself make traffic very uneven.

- The traffic allocation strategy is too simple and supports too few algorithms. DNS generally supports only round-robin (rr); scheduling algorithms such as weighted or hash-based scheduling are not supported.

- The number of IP addresses DNS can return is limited. DNS transfers messages over UDP, and the size of each UDP message is limited (traditionally to 512 bytes without EDNS0, and by the MTU of the link), so only a small number of IP addresses fit in one response. Alibaba's DNS system, for example, supports 10 different IP addresses for the same domain name.
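To see why the list is short, here is a back-of-the-envelope calculation of how many IPv4 A records fit in a classic 512-byte DNS response over UDP. It assumes a single question, answer names compressed to 2-byte pointers, and no authority, additional or EDNS0 records; real responses often carry extra records, and providers usually impose lower limits of their own.

```python
DNS_HEADER = 12   # bytes: fixed-size DNS message header
UDP_LIMIT = 512   # bytes: classic DNS-over-UDP limit without EDNS0 (RFC 1035)
# One A record: name pointer (2) + TYPE (2) + CLASS (2) + TTL (4) + RDLENGTH (2) + IPv4 address (4)
A_RECORD = 2 + 2 + 2 + 4 + 2 + 4


def qname_length(domain):
    """Wire length of a domain name: a length byte plus the label for each label, then a zero byte."""
    return sum(1 + len(label) for label in domain.split(".")) + 1


def max_a_records(domain, limit=UDP_LIMIT):
    question = qname_length(domain) + 4  # QNAME + QTYPE (2) + QCLASS (2)
    return (limit - DNS_HEADER - question) // A_RECORD


print(max_a_records("www.example.com"))  # 29
```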

Because of these obvious drawbacks, this approach is rarely used on its own for load balancing in production. It is described here mainly because it makes the concept of load balancing easier to understand.

Large companies such as BAT (Baidu, Alibaba, Tencent) generally use DNS for global, geographic-level load balancing, so that users reach a nearby site and access is faster. This is usually only the first, coarse layer of balancing for incoming traffic; below it, more specialised load balancing equipment implements the actual load balancing architecture.
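A minimal sketch of geographic selection, assuming the DNS server already knows the client's approximate coordinates (in practice this is usually inferred from the IP address of the LocalDNS resolver or, where supported, the EDNS Client Subnet option). The data centre names and coordinates are hypothetical, and real global load balancers also take capacity and health into account, not just distance.

```python
import math

# Hypothetical data centres and their (latitude, longitude)
DATA_CENTRES = {
    "beijing": (39.90, 116.40),
    "shanghai": (31.23, 121.47),
    "guangzhou": (23.13, 113.26),
}


def haversine_km(a, b):
    """Great-circle distance in kilometres between two (lat, lon) points."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371 * math.asin(math.sqrt(h))  # 6371 km: mean Earth radius


def nearest_dc(client_location):
    """Return the name of the data centre closest to the client."""
    return min(DATA_CENTRES, key=lambda dc: haversine_km(client_location, DATA_CENTRES[dc]))


print(nearest_dc((22.54, 114.06)))  # a client in Shenzhen -> guangzhou
```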

Hardware Load Balancing

Hardware load balancing uses dedicated hardware appliances to implement the load balancing function. Two typical hardware load balancers in the industry are F5 and A10.

These devices are capable and feature-rich, but very expensive. Usually only very large companies use them; small and medium-sized companies generally cannot afford them, and for a business of that size such devices would be largely wasted.

Advantages of hardware load balancing:

- Feature-rich: Comprehensive support for load balancing at every layer, with a full range of load balancing algorithms.

- High performance: Performance far exceeds that of common software load balancers.

- High stability: Commercial hardware load balancers are rigorously tested and widely deployed, so they are very stable.

- Security: They also offer features such as a firewall, anti-DDoS protection, and SNAT support.

The disadvantages of hardware load balancing are also obvious:

- Expensive;

- Poor extensibility: difficult to extend or customise;

- Commissioning and maintenance are cumbersome and require specialised staff.

Software Load Balancing

Software load balancing runs load balancing software on ordinary servers to provide the load balancing function.

The most common options today are Nginx, HAProxy and LVS.

Their main differences:

- Nginx: Layer 7 load balancing with support for HTTP and e-mail protocols, as well as Layer 4 load balancing;

- HAProxy: Supports Layer 7 rules and performs very well. It is the default load balancing software used by OpenStack;

- LVS: Runs in kernel space and has the highest performance among software load balancers. Strictly speaking it works at Layer 3, which makes it more general and applicable to a wide variety of application services.

Advantages of software load balancing:

- Easy to operate: both deployment and maintenance are relatively simple;

- Cheap: Only the cost of the server is required and the software is free;

- Flexibility: Layer 4 and Layer 7 load balancing can be selected based on business characteristics, making it easy to expand and customise functionality.
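The difference between the two layers comes down to what the balancer can see. The sketch below contrasts them: a Layer 4 decision can only use the connection's addresses, ports and protocol (hashed here with CRC32), while a Layer 7 decision can read the HTTP request and route by URL path. The function names, pool names and path prefixes are hypothetical, not Nginx, HAProxy or LVS configuration.

```python
import zlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Connection:
    """The 5-tuple a Layer 4 balancer sees; it never parses the payload."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str  # e.g. "tcp" or "udp"


def pick_layer4(conn, backends):
    """Layer 4: hash the 5-tuple, so a given connection always maps to one backend."""
    key = f"{conn.src_ip}:{conn.src_port}->{conn.dst_ip}:{conn.dst_port}/{conn.protocol}"
    return backends[zlib.crc32(key.encode()) % len(backends)]


def pick_layer7(http_path, pools):
    """Layer 7: use the longest path prefix that matches (assumes "/" is a catch-all)."""
    matches = [prefix for prefix in pools if http_path.startswith(prefix)]
    return pools[max(matches, key=len)]


pools = {"/": "web-pool", "/api/": "api-pool", "/static/": "static-pool"}
print(pick_layer7("/api/users/42", pools))  # api-pool
print(pick_layer7("/index.html", pools))    # web-pool

conn = Connection("198.51.100.7", 51234, "203.0.113.1", 80, "tcp")
print(pick_layer4(conn, ["10.0.0.1", "10.0.0.2"]))  # the same backend every time for this connection
```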

Load Balancing LVS

Nginx, HAProxy and LVS are all commonly used software load balancers. LVS is mainly used for Layer 4 load balancing, and large companies such as BAT are known to be heavy users of it, because its excellent performance saves them substantial costs.

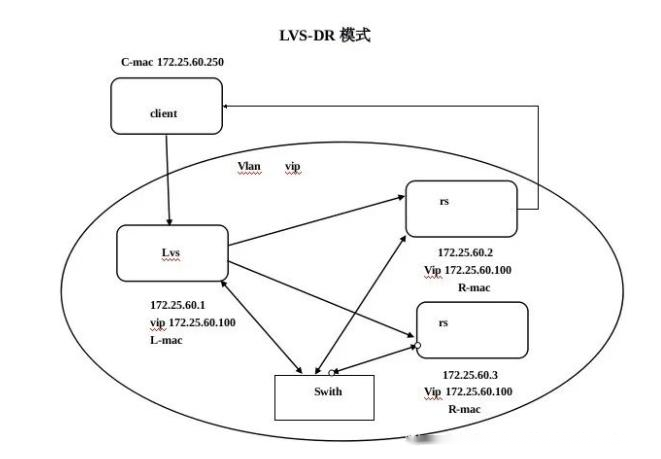

LVS (Linux Virtual Server) is an open-source project started by Dr Zhang Wensong of China. It is very popular in the community and is a powerful Layer 4 reverse proxy server.

It is now part of the standard Linux kernel and is characterised by reliability, high performance, scalability and ease of operation, delivering excellent performance at low cost.

Netfilter Basics

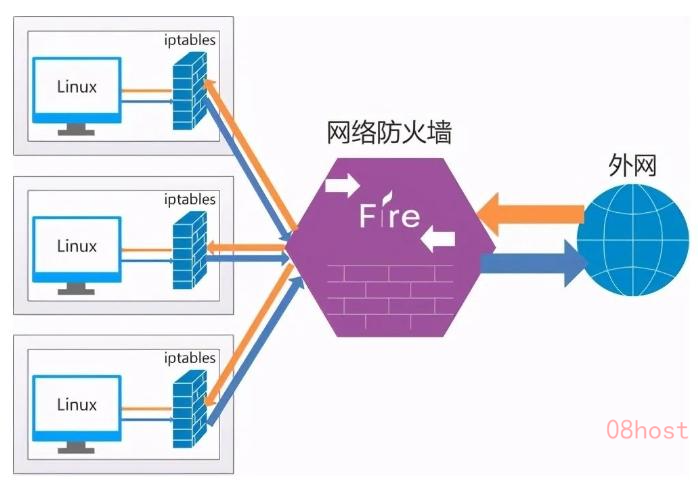

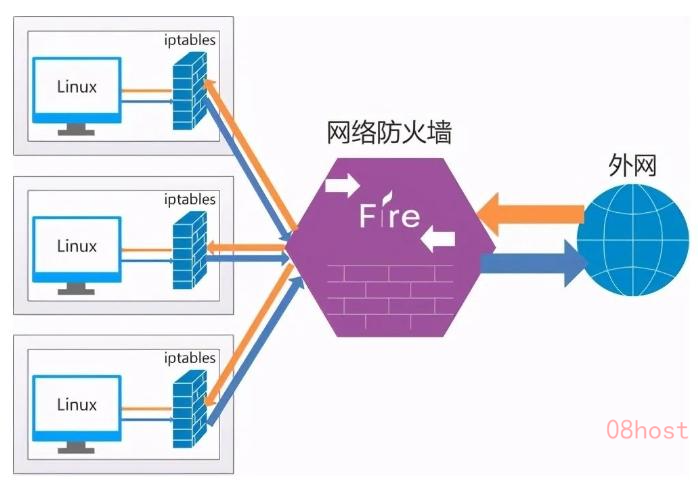

LVS implements load balancing on top of the netfilter framework in the Linux kernel, so before learning LVS you need a basic understanding of how netfilter works. netfilter is in fact quite complex. What we usually call the Linux firewall is netfilter, although what we usually operate is iptables: iptables is just a user-space tool for writing rules and passing them to the kernel, while netfilter does the real work. The figure below gives a simple picture of how netfilter works:

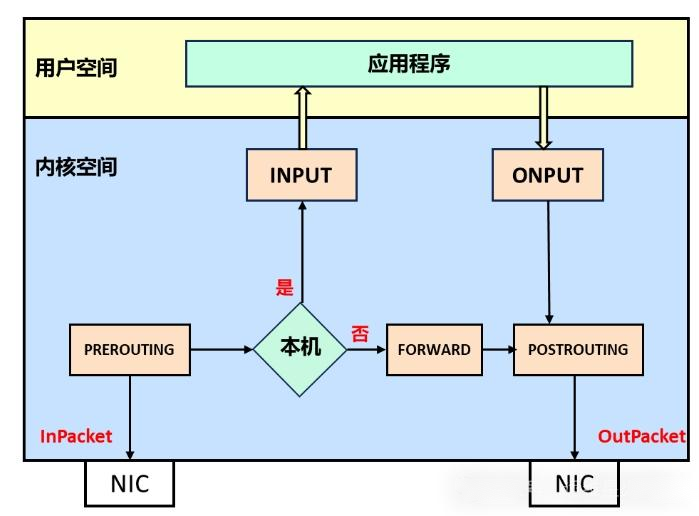

netfilter is the kernel-based firewall mechanism of Linux. It is a generic, abstract framework that provides a set of hooks, on top of which features such as packet filtering, network address translation (NAT) and connection tracking are implemented for each protocol type.

In short, netfilter places a number of checkpoints (hooks) along the path a packet takes through the network stack, and at each one the relevant operations are performed according to the configured rules. There are five hooks in total: PREROUTING, INPUT, FORWARD, OUTPUT and POSTROUTING.

- PREROUTING: Packets that have just entered the network layer and have not yet been routed pass through here.

- INPUT: Packets that the route lookup has determined are destined for the local machine pass through here.

- FORWARD: Packets that the route lookup has determined must be forwarded pass through here, before POSTROUTING.

- OUTPUT: Packets just sent by a local process pass through here.

- POSTROUTING: Packets that have been routed and are about to leave the device pass through here, whether they are being forwarded or were generated locally.
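To make the idea of hooks more concrete, here is a toy Python model of one aspect of it (this is not the kernel API): callbacks are registered at a hook with a priority, run in ascending priority order as they do in netfilter, and a DROP verdict stops the packet. The Netfilter class, the packet dictionaries and the two example callbacks are all hypothetical.

```python
from enum import Enum

ACCEPT, DROP = "ACCEPT", "DROP"


class Hook(Enum):
    PREROUTING = 1
    INPUT = 2
    FORWARD = 3
    OUTPUT = 4
    POSTROUTING = 5


class Netfilter:
    """Toy model: per-hook callbacks, run in ascending priority order."""

    def __init__(self):
        self.callbacks = {hook: [] for hook in Hook}

    def register(self, hook, priority, fn):
        self.callbacks[hook].append((priority, fn))
        # Lower priority values run first.
        self.callbacks[hook].sort(key=lambda entry: entry[0])

    def run(self, hook, packet):
        for _, fn in self.callbacks[hook]:
            if fn(packet) == DROP:
                return DROP  # a DROP verdict ends traversal immediately
        return ACCEPT


seen_ports = []


def log_port(packet):
    seen_ports.append(packet["dport"])
    return ACCEPT


def block_telnet(packet):
    return DROP if packet["dport"] == 23 else ACCEPT


nf = Netfilter()
nf.register(Hook.INPUT, 0, block_telnet)
nf.register(Hook.INPUT, -100, log_port)  # registered later, but runs first

print(nf.run(Hook.INPUT, {"dport": 80}))  # ACCEPT
print(nf.run(Hook.INPUT, {"dport": 23}))  # DROP
print(seen_ports)                         # [80, 23]
```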

When a packet arrives at the network card, it passes through the link layer into the network layer and reaches PREROUTING. A route lookup is then performed on the destination IP address. If the destination IP is the local machine, the packet is passed on to INPUT, and the protocol stack delivers it to the appropriate application according to the destination port.

The application processes the request and sends the response packet to OUTPUT; after POSTROUTING it is finally sent out through the network card.

If the destination IP is not local and the server has IP forwarding enabled (the net.ipv4.ip_forward kernel parameter), the packet is handed to FORWARD and, after POSTROUTING, is finally sent out through the network card.
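The three paths just described can be summarised in a few lines. This is a simplified sketch that assumes the routing decision is only "is the destination one of my addresses?" and ignores NAT, policy routing and rule verdicts; the function name and its parameters are hypothetical.

```python
def hooks_traversed(dst_ip, local_ips, ip_forward, locally_generated=False):
    """Return the netfilter hooks a packet passes through (simplified model)."""
    if locally_generated:
        # A response from a local application: OUTPUT, then POSTROUTING, then the NIC.
        return ["OUTPUT", "POSTROUTING"]
    path = ["PREROUTING"]  # every incoming packet hits PREROUTING before routing
    if dst_ip in local_ips:
        path.append("INPUT")  # destined for this machine: delivered to the application
    elif ip_forward:
        path += ["FORWARD", "POSTROUTING"]  # forwarded and sent out through the NIC
    # Otherwise the packet is not local and forwarding is disabled, so it is dropped.
    return path


local = {"10.0.0.5"}
print(hooks_traversed("10.0.0.5", local, ip_forward=False))  # ['PREROUTING', 'INPUT']
print(hooks_traversed("10.0.0.9", local, ip_forward=True))   # ['PREROUTING', 'FORWARD', 'POSTROUTING']
print(hooks_traversed("10.0.0.9", local, ip_forward=False))  # ['PREROUTING']
print(hooks_traversed("198.51.100.7", local, ip_forward=False, locally_generated=True))  # ['OUTPUT', 'POSTROUTING']
```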